It can be pretty convenient when Facebook processes the gargantuan amount of personal data it has on you to show you ads for the precise lemon curd recipe you never knew you were craving.

But what are the harms associated with this kind of targeting? It’s hard to answer that question — because an overbroad law actually prohibits the kind of studies best positioned to figure it out.

The implications go far beyond dessert — studies have shown that people are being treated differently online based on their race, actual or perceived. Websites have been found to use demographic data to raise or lower prices, show different advertisements, or steer people to different content.

The consequences are real. Big data has resulted in people of color, or people who live in communities of color, paying more for car insurance and being more likely to see ads for predatory loans.

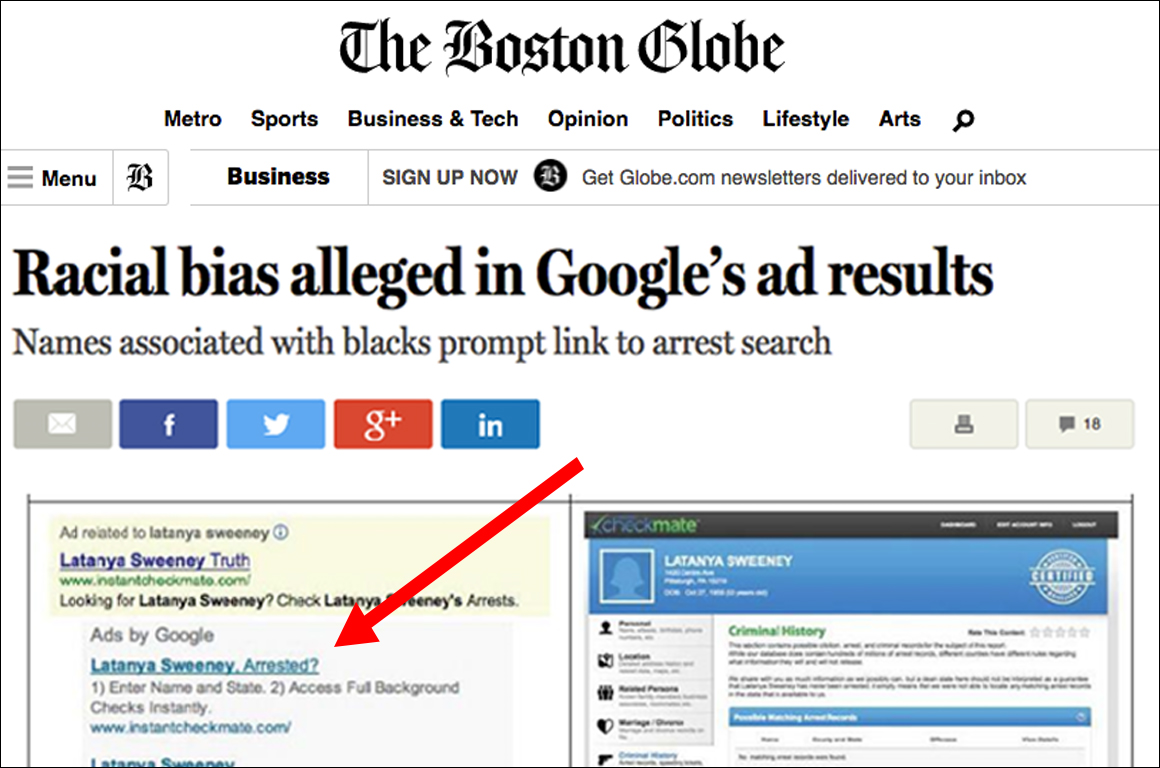

One recent study by Harvard computer scientist Latanya Sweeney found that searches for names typically associated with Black people were more likely to bring up ads for criminal records.

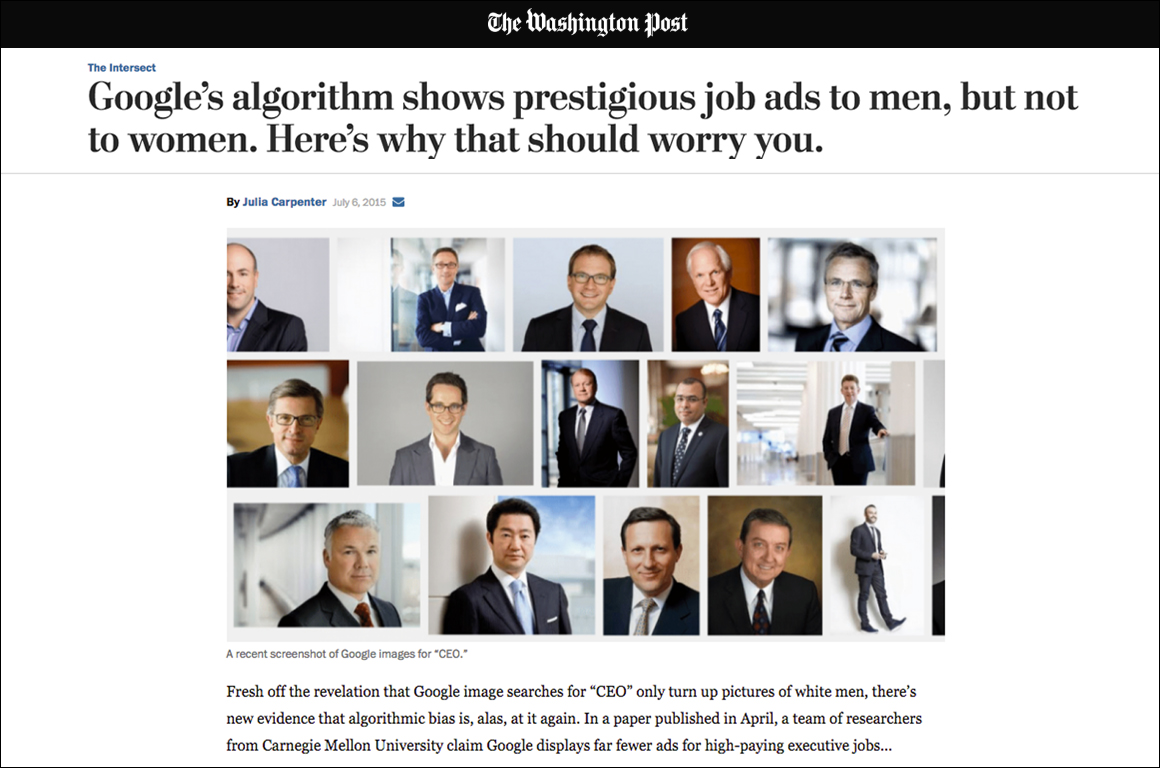

Another study found that Google showed ads for higher-paying executive jobs more often to users it presumed to be men.

These examples are likely just the tip of the iceberg — but there’s so much we don’t know because the algorithms that advertisers use to target internet users are secret, as are the detailed profiles that big data brokers amass on ordinary people (and then sell to those advertisers).

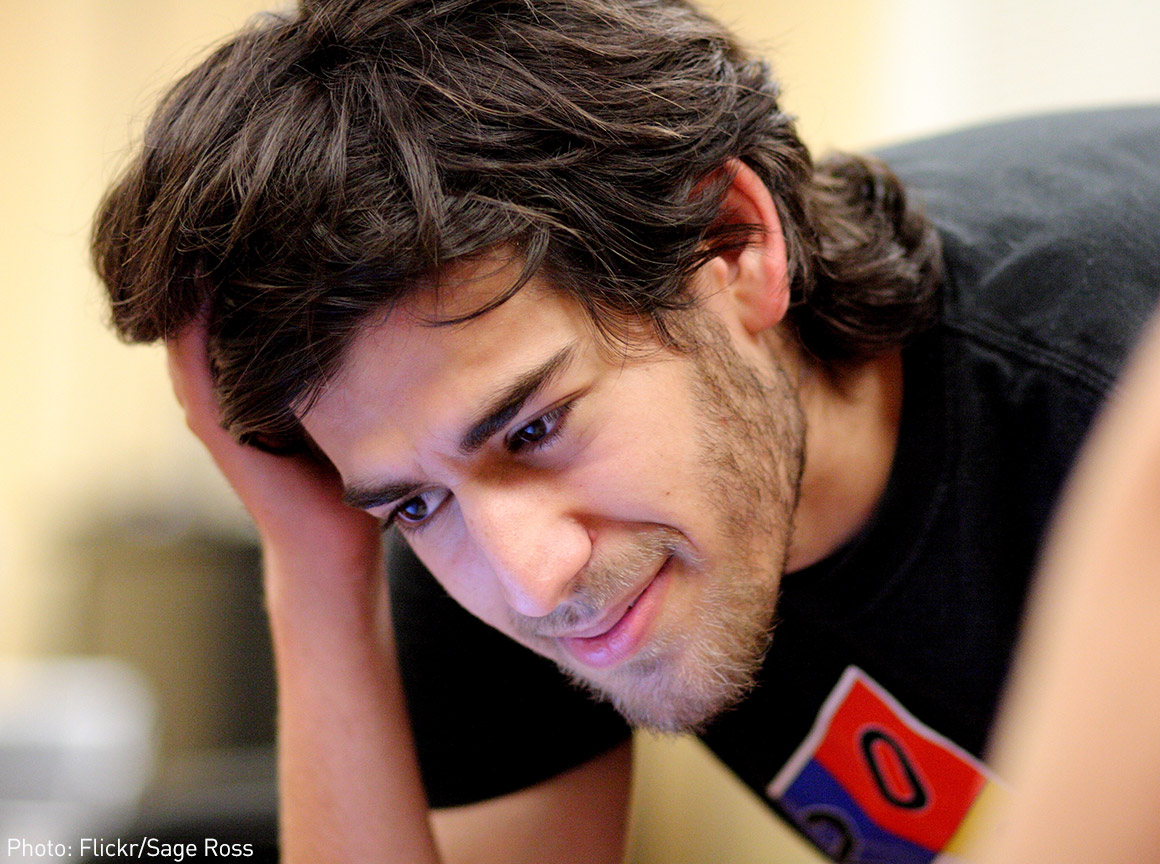

To make matters more complicated, a law called the Computer Fraud and Abuse Act makes it illegal to do much of the work that’s required to uncover discriminatory practices online. The CFAA creates severe civil and criminal penalties for people who violate websites’ “terms of service” — all the fine print you never actually read that websites make you agree to. Internet activist Aaron Swartz was charged under that law and faced decades in prison before he took his own life in 2013.

Aaron Swartz

Many websites' terms of service prohibit the use of automated tools and the creation of fake user profiles, which are exactly the methods researchers rely on to audit algorithms and publish their findings. If they can't do that kind of research, we can't know whether the algorithms that deliver content are discriminating against us.

Is algorithmic discrimination the redlining of the 21st century?

For much of the 20th century, the federal government refused to guarantee mortgages in communities where people of color lived, and banks refused to lend in these neighborhoods. These neighborhoods were literally outlined in red on maps, hence the term redlining.

Families of color still feel the impact of decades of mortgage denials. Because the lack of credit drove down home values in these neighborhoods, and those homes are still worth less today, these families have generally inherited less wealth, which contributes to the racial wealth gap. As a result, they have also been denied access to high-quality schools, adequate transportation, employment, and environmentally safe neighborhoods.

The Fair Housing Act of 1968 made redlining, along with other forms of housing discrimination, illegal. To check that landlords and real estate agents are following the law, fair housing testers of different races apply for housing to see whether testers of color are treated the same as white testers.

Just as offline fair housing testing is crucial for discovering housing discrimination, robust online testing will be necessary to ensure that these protections are extended to the internet, too. Government agencies should require websites — especially housing providers, employers, and lenders — to audit themselves to ensure that they are not discriminating and should encourage researchers to regularly test and monitor algorithms to be sure they’re treating everyone fairly. It is outrageous that the CFAA prohibits so much of this work. It’s also unnecessary — it’s entirely possible to permit civil rights testing without opening the door to fraud.

The ACLU is challenging the CFAA to ensure that researchers and journalists aren’t thwarted from pursuing valuable research and investigations to determine whether inequality is baked into the algorithms that increasingly govern our world.

The next generation of civil rights testing will need to happen online. Without this kind of research, we’ll have no way of knowing whether websites are selling or advertising goods and services in a way that exacerbates existing racial disparities.

For more on the Computer Fraud and Abuse Act, read our Free Future post, "ACLU Challenges Computer Crimes Law That is Thwarting Research on Discrimination Online."